
HPO - Grid Search

Experiment initialization and data preparation

from piml import Experiment

from piml.models import XGB2Regressor

exp = Experiment()

exp.data_loader(data="BikeSharing", silent=True)

exp.data_summary(feature_exclude=["yr", "mnth", "temp"], silent=True)

exp.data_prepare(target="cnt", task_type="regression", silent=True)

Train a baseline XGB2 model with default hyperparameters

exp.model_train(model=XGB2Regressor(), name="XGB2")

Define the hyperparameter search space for the grid search

parameters = {'n_estimators': [100, 300, 500],
              'eta': [0.1, 0.3, 0.5],
              'reg_lambda': [0.0, 0.5, 1.0],
              'reg_alpha': [0.0, 0.5, 1.0]}
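A grid search tries every combination of the listed values, so this space holds 3^4 = 81 candidate configurations. The plain-Python sketch below shows that expansion with `itertools.product`; `expand_grid` is a hypothetical helper for illustration, not part of PiML.

```python
from itertools import product

# Same search space as above: each key maps to the candidate values to try.
parameters = {
    "n_estimators": [100, 300, 500],
    "eta": [0.1, 0.3, 0.5],
    "reg_lambda": [0.0, 0.5, 1.0],
    "reg_alpha": [0.0, 0.5, 1.0],
}


def expand_grid(space):
    """Hypothetical helper: return every combination of values as a list of dicts."""
    keys = list(space)
    # product(...) yields the Cartesian product; the first key varies slowest.
    return [dict(zip(keys, values)) for values in product(*(space[k] for k in keys))]


candidates = expand_grid(parameters)
print(len(candidates))  # 81
print(candidates[0])    # {'n_estimators': 100, 'eta': 0.1, 'reg_lambda': 0.0, 'reg_alpha': 0.0}
```

Each of these 81 dictionaries corresponds to one model fit during tuning.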

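Tune the hyperparameters of the registered XGB2 model with grid search, evaluating MSE and MAE; result.data holds the results for each candidate configuration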

result = exp.model_tune("XGB2", method="grid", parameters=parameters, metric=['MSE', 'MAE'], test_ratio=0.2)

result.data

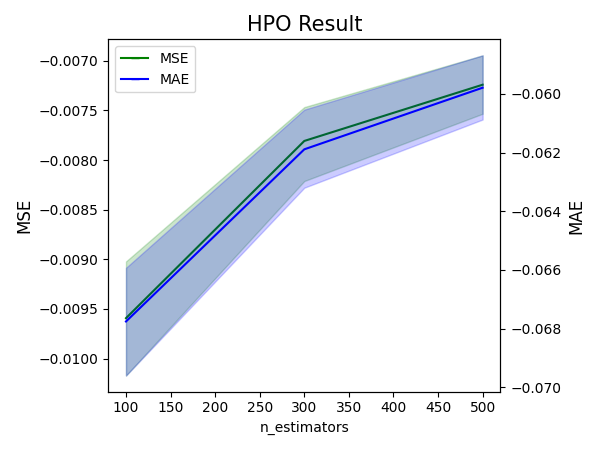
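`model_tune` runs the search internally. As a rough mental model only, the plain-Python sketch below assumes a standard hold-out grid search: a fraction `test_ratio` of the training rows is set aside, every candidate is fitted on the remaining rows and scored with MSE and MAE, and candidates are ranked by the first metric. How PiML actually splits and ranks is not stated on this page, and the one-parameter ridge model `fit_ridge_1d` is a hypothetical stand-in for XGB2.

```python
import random


def mse(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def fit_ridge_1d(x, y, reg_lambda):
    """Hypothetical stand-in model: y ~ w * x with an L2 penalty on w, no intercept."""
    w = sum(a * b for a, b in zip(x, y)) / (sum(a * a for a in x) + reg_lambda)
    return lambda xs: [w * a for a in xs]


def holdout_grid_search(x, y, candidates, fit, test_ratio=0.2, seed=0):
    """Score each candidate on a held-out split; return rows sorted by MSE, best first."""
    idx = list(range(len(x)))
    random.Random(seed).shuffle(idx)
    n_val = int(len(idx) * test_ratio)
    val, tr = idx[:n_val], idx[n_val:]
    x_tr, y_tr = [x[i] for i in tr], [y[i] for i in tr]
    x_val, y_val = [x[i] for i in val], [y[i] for i in val]
    rows = []
    for params in candidates:
        predict = fit(x_tr, y_tr, **params)  # fit on the training part only
        pred = predict(x_val)                # score on the held-out part
        rows.append({"params": params, "MSE": mse(y_val, pred), "MAE": mae(y_val, pred)})
    # Assumption: rank 1 is the candidate with the lowest validation MSE.
    return sorted(rows, key=lambda r: r["MSE"])


# Synthetic toy data: y = 2x plus a small repeating perturbation.
x = [i / 10 for i in range(50)]
y = [2 * a + 0.1 * ((i % 5) - 2) for i, a in enumerate(x)]
grid = [{"reg_lambda": v} for v in (0.0, 0.5, 1.0, 50.0)]
results = holdout_grid_search(x, y, grid, fit_ridge_1d)
for rank, row in enumerate(results, start=1):
    print(rank, row["params"], round(row["MSE"], 4), round(row["MAE"], 4))
```

Scoring on rows the model was not fitted on keeps the ranking from simply rewarding the least regularized candidate.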

Plot the tuning results against the n_estimators hyperparameter

fig = result.plot(param='n_estimators', figsize=(6, 4.5))
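With four tuned hyperparameters, a view against `n_estimators` alone has to summarize the other three somehow. The sketch below shows one common summary, keeping the best (lowest) score for each value of the chosen parameter. This is an assumption for illustration, not a description of what `result.plot` draws, and the scores are produced by a made-up formula rather than taken from this example.

```python
from collections import defaultdict
from itertools import product


def best_score_per_value(rows, param, metric="MSE"):
    """For each value of `param`, keep the lowest `metric` across all other settings."""
    best = defaultdict(lambda: float("inf"))
    for row in rows:
        value = row["params"][param]
        best[value] = min(best[value], row[metric])
    return dict(sorted(best.items()))


# Synthetic rows for illustration only: "MSE" is a made-up function of the
# parameters, not output from PiML or XGB2.
rows = []
for n, eta in product([100, 300, 500], [0.1, 0.3, 0.5]):
    fake_mse = 0.01 - 0.00001 * n * eta
    rows.append({"params": {"n_estimators": n, "eta": eta}, "MSE": fake_mse})

print(best_score_per_value(rows, "n_estimators"))
```

Taking the mean instead of the minimum is an equally valid summary; it shows how sensitive the score is to the other hyperparameters rather than the best achievable value.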

Refit the model using the top-ranked hyperparameter configuration

params = result.get_params_ranks(rank=1)

exp.model_train(XGB2Regressor(**params), name="XGB2-HPO-GridSearch")
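`get_params_ranks(rank=1)` returns a parameter dictionary, which is unpacked into the model constructor with `**`. The plain-Python sketch below mimics that pattern; `params_at_rank` and `ToyRegressor` are hypothetical stand-ins, ranking by ascending MSE is an assumption rather than PiML's documented rule, and the scores are placeholders, not results from this example.

```python
def params_at_rank(rows, rank=1, metric="MSE"):
    """Hypothetical helper: return the parameter dict at a 1-based rank (lower metric is better)."""
    ordered = sorted(rows, key=lambda r: r[metric])
    return dict(ordered[rank - 1]["params"])


class ToyRegressor:
    """Hypothetical model class that takes hyperparameters as keyword arguments.

    The default values here are arbitrary and are not XGB2Regressor's defaults.
    """

    def __init__(self, n_estimators=100, eta=0.3, reg_lambda=1.0, reg_alpha=0.0):
        self.n_estimators = n_estimators
        self.eta = eta
        self.reg_lambda = reg_lambda
        self.reg_alpha = reg_alpha


rows = [  # placeholder scores for illustration only
    {"params": {"n_estimators": 100, "eta": 0.5}, "MSE": 3.0},
    {"params": {"n_estimators": 500, "eta": 0.1}, "MSE": 1.0},
    {"params": {"n_estimators": 300, "eta": 0.3}, "MSE": 2.0},
]
best = params_at_rank(rows, rank=1)
model = ToyRegressor(**best)  # equivalent to ToyRegressor(n_estimators=500, eta=0.1)
print(best, model.n_estimators, model.eta, model.reg_lambda)
```

With `**` unpacking, keys missing from the dictionary fall back to the constructor defaults, and a key that `ToyRegressor` does not accept raises `TypeError`.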

Compare the default model with the HPO-refitted model, starting with the accuracy of the default model

exp.model_diagnose("XGB2", show="accuracy_table")

| | MSE | MAE | R2 |
|---|---|---|---|
| Train | 0.0090 | 0.0669 | 0.7382 |
| Test | 0.0095 | 0.0688 | 0.7287 |
| Gap | 0.0005 | 0.0019 | -0.0095 |

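Accuracy of the HPO-refitted model: compared with the default model, it has lower MSE and MAE and higher R2 on both the train and test sets, with a slightly larger train-test gap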

exp.model_diagnose("XGB2-HPO-GridSearch", show="accuracy_table")

| | MSE | MAE | R2 |
|---|---|---|---|
| Train | 0.0057 | 0.0535 | 0.8346 |
| Test | 0.0063 | 0.0559 | 0.8193 |
| Gap | 0.0006 | 0.0024 | -0.0153 |
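In both accuracy tables the Gap row equals Test minus Train (for example, 0.0095 - 0.0090 = 0.0005 for the default model's MSE). The sketch below gives the textbook definitions of MSE, MAE and R2 and reproduces the Gap rows and the test-set change from the table values; the functions are standard formulas written for illustration, not PiML's implementation.

```python
def mse(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true, y_pred):
    """1 - (residual sum of squares) / (total sum of squares around the mean)."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


# Values copied from the two accuracy tables above.
default = {"Train": {"MSE": 0.0090, "MAE": 0.0669, "R2": 0.7382},
           "Test": {"MSE": 0.0095, "MAE": 0.0688, "R2": 0.7287}}
tuned = {"Train": {"MSE": 0.0057, "MAE": 0.0535, "R2": 0.8346},
         "Test": {"MSE": 0.0063, "MAE": 0.0559, "R2": 0.8193}}


def gap(table):
    """Gap row as Test minus Train, rounded to the tables' four decimals."""
    return {m: round(table["Test"][m] - table["Train"][m], 4) for m in table["Test"]}


print(gap(default))  # {'MSE': 0.0005, 'MAE': 0.0019, 'R2': -0.0095}
print(gap(tuned))    # {'MSE': 0.0006, 'MAE': 0.0024, 'R2': -0.0153}
change = (tuned["Test"]["MSE"] - default["Test"]["MSE"]) / default["Test"]["MSE"]
print(f"Test MSE change: {100 * change:.1f}%")
```

By these numbers, grid search lowers the test MSE by about 34% (0.0095 to 0.0063) and raises the test R2 from 0.7287 to 0.8193.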

Total running time of the script: (3 minutes 52.724 seconds)

Estimated memory usage: 31 MB