
XGB-2 Classification (Taiwan Credit)

Experiment initialization and data preparation

from piml import Experiment

from piml.models import XGB2Classifier

exp = Experiment()

exp.data_loader(data="TaiwanCredit", silent=True)

# Exclude the credit limit (LIMIT_BAL) and the demographic attributes from the modeling features
exp.data_summary(feature_exclude=["LIMIT_BAL", "SEX", "EDUCATION", "MARRIAGE", "AGE"], silent=True)

exp.data_prepare(target="FlagDefault", task_type="classification", silent=True)

Train XGB2 model

exp.model_train(model=XGB2Classifier(), name='XGB2')
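As the name suggests, XGB2 is understood to be gradient-boosted trees limited to depth 2, so every tree splits on at most two features. That is what makes the model interpretable: trees can be regrouped by the feature set they use into main effects and pairwise interactions. The sketch below illustrates this regrouping with a few hand-written, hypothetical trees (feature thresholds and leaf values are made up, and the base score is ignored); it is not PiML's fitted ensemble or its internal code.

```python
from collections import defaultdict

# Hypothetical depth-2 trees: (features used, prediction function).
# A depth-2 tree can split on at most two distinct features.
trees = [
    (("PAY_1",), lambda x: 0.4 if x["PAY_1"] >= 2 else -0.1),
    (("PAY_1", "BILL_AMT1"),
     lambda x: 0.2 if x["PAY_1"] >= 1 and x["BILL_AMT1"] > 5000 else 0.0),
    (("PAY_AMT1",), lambda x: -0.3 if x["PAY_AMT1"] > 2000 else 0.05),
    (("PAY_1",), lambda x: 0.1 if x["PAY_1"] >= 3 else 0.0),
]

def group_by_effect(trees):
    """Collect trees that use the same feature set into one effect."""
    effects = defaultdict(list)
    for features, fn in trees:
        effects[tuple(sorted(features))].append(fn)
    return effects

def effect_values(effects, x):
    """Evaluate each grouped effect (the sum of its trees) at one sample."""
    return {key: sum(fn(x) for fn in fns) for key, fns in effects.items()}

sample = {"PAY_1": 2, "BILL_AMT1": 8000, "PAY_AMT1": 500}  # made-up sample
contributions = effect_values(group_by_effect(trees), sample)
raw_score = sum(contributions.values())  # same as summing all trees directly
print(contributions)
print(raw_score)
```

Because grouping only reorders a sum, the raw score is unchanged; what changes is that each term now depends on one feature or one pair of features.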

Train XGB2 with a monotonically increasing constraint on PAY_1

exp.model_train(model=XGB2Classifier(mono_increasing_list=("PAY_1", )), name="Mono-XGB2")
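`mono_increasing_list=("PAY_1", )` asks the model's output to be non-decreasing in PAY_1. A minimal sketch of one way to check that property empirically: sweep PAY_1 over a grid while holding the other features fixed, and confirm the score never decreases. `toy_score` is a hypothetical stand-in for a fitted model's raw output, not a PiML API, and the PAY_1 grid of repayment-status codes is an assumed range.

```python
def toy_score(x):
    """Hypothetical raw score of a fitted classifier (stand-in, not PiML)."""
    return 0.3 * min(x["PAY_1"], 4) - 0.0001 * x["PAY_AMT1"]

def is_monotone_increasing(model, base, feature, grid):
    """True if model output is non-decreasing along `feature` over `grid`,
    with every other feature held at its value in `base`."""
    outputs = [model({**base, feature: value}) for value in grid]
    return all(a <= b for a, b in zip(outputs, outputs[1:]))

grid = list(range(-2, 9))            # assumed range of PAY_1 codes
base = {"PAY_1": 0, "PAY_AMT1": 1500}  # made-up reference sample
print(is_monotone_increasing(toy_score, base, "PAY_1", grid))
```

Passing this check at a few reference samples is evidence rather than proof; in XGBoost-style implementations the constraint itself is enforced while the trees are grown.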

Evaluate predictive performance of XGB2

exp.model_diagnose(model='XGB2', show='accuracy_table')

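|       | ACC    | AUC     | F1     | LogLoss | Brier  |
|-------|--------|---------|--------|---------|--------|
| Train | 0.8219 | 0.7978  | 0.4759 | 0.4196  | 0.1316 |
| Test  | 0.8290 | 0.7728  | 0.4797 | 0.4252  | 0.1319 |
| Gap   | 0.0071 | -0.0251 | 0.0038 | 0.0057  | 0.0004 |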
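The columns are standard binary-classification metrics. A minimal sketch of their textbook definitions in plain Python, assuming a 0.5 decision threshold for ACC and F1 (PiML's defaults may differ) and using made-up labels and predicted probabilities; this is not PiML's implementation.

```python
import math

def accuracy(y, p, threshold=0.5):
    return sum((pi >= threshold) == bool(yi) for yi, pi in zip(y, p)) / len(y)

def f1(y, p, threshold=0.5):
    pred = [pi >= threshold for pi in p]
    tp = sum(1 for yi, pr in zip(y, pred) if yi and pr)
    fp = sum(1 for yi, pr in zip(y, pred) if not yi and pr)
    fn = sum(1 for yi, pr in zip(y, pred) if yi and not pr)
    return 2 * tp / (2 * tp + fp + fn) if tp else 0.0

def log_loss(y, p, eps=1e-15):
    total = 0.0
    for yi, pi in zip(y, p):
        pi = min(max(pi, eps), 1 - eps)  # clip to avoid log(0)
        total += -(yi * math.log(pi) + (1 - yi) * math.log(1 - pi))
    return total / len(y)

def brier(y, p):
    return sum((pi - yi) ** 2 for yi, pi in zip(y, p)) / len(y)

def auc(y, p):
    """Probability a random positive outranks a random negative (ties = 1/2)."""
    pos = [pi for yi, pi in zip(y, p) if yi]
    neg = [pi for yi, pi in zip(y, p) if not yi]
    wins = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in pos for b in neg)
    return wins / (len(pos) * len(neg))

y = [0, 0, 1, 1, 0, 1]                # made-up labels
p = [0.1, 0.6, 0.7, 0.4, 0.2, 0.9]    # made-up predicted P(y = 1)
for name, fn in [("ACC", accuracy), ("AUC", auc), ("F1", f1),
                 ("LogLoss", log_loss), ("Brier", brier)]:
    print(name, round(fn(y, p), 4))
```

ACC, AUC and F1 are higher-is-better, while LogLoss and Brier are losses, so lower is better.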

Evaluate predictive performance of Mono-XGB2

exp.model_diagnose(model='Mono-XGB2', show='accuracy_table')

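|       | ACC    | AUC     | F1     | LogLoss | Brier  |
|-------|--------|---------|--------|---------|--------|
| Train | 0.8216 | 0.7968  | 0.4780 | 0.4202  | 0.1318 |
| Test  | 0.8288 | 0.7739  | 0.4816 | 0.4249  | 0.1320 |
| Gap   | 0.0072 | -0.0229 | 0.0036 | 0.0047  | 0.0001 |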

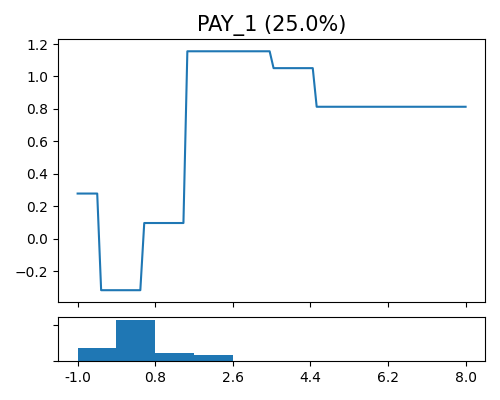

Global effect plot for PAY_1 of XGB2

exp.model_interpret(model='XGB2', show="global_effect_plot", uni_feature="PAY_1", original_scale=True, figsize=(5, 4))
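For an additive model such as XGB2, the global effect plot of a single feature shows that feature's main-effect component as a function of its value. A minimal sketch, assuming a hypothetical component `g_pay1` with made-up values and the common functional-ANOVA convention of centering each component so its mean over the data is zero (the exact centering PiML applies may differ):

```python
from statistics import mean

def g_pay1(v):
    """Hypothetical main-effect component for PAY_1 (raw, uncentered)."""
    return {-2: -0.6, -1: -0.5, 0: -0.4, 1: 0.3, 2: 1.1}.get(v, 1.3)

def centered_effect(component, data_values, grid):
    """Evaluate `component` on `grid`, shifted so its mean over `data_values` is 0."""
    offset = mean(component(v) for v in data_values)
    return [(v, component(v) - offset) for v in grid]

pay1_sample = [0, 0, -1, 0, 2, 1, -2, 0, 3, -1]  # made-up observed PAY_1 values
curve = centered_effect(g_pay1, pay1_sample, grid=range(-2, 5))
for value, effect in curve:
    print(f"PAY_1={value:>2}: {effect:+.3f}")
```

Centering moves any constant into the intercept, so each plotted effect reads as "raise or lower the log-odds relative to an average sample".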

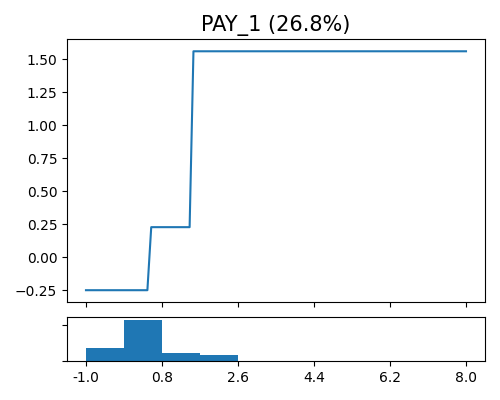

Global effect plot for PAY_1 of Mono-XGB2

exp.model_interpret(model='Mono-XGB2', show="global_effect_plot", uni_feature="PAY_1", original_scale=True, figsize=(5, 4))

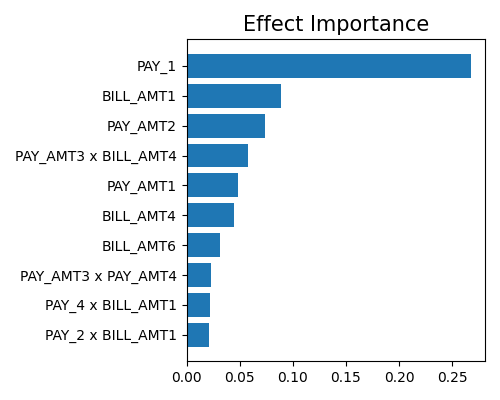

Effect importance

exp.model_interpret(model='Mono-XGB2', show="global_ei", figsize=(5, 4))
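Effect importance ranks the model's components (main effects and pairwise interactions). A minimal sketch, assuming importance is each component's variance over the data normalized to sum to 100%, a common functional-ANOVA convention that may not match PiML's exact formula, with made-up per-sample contributions:

```python
from statistics import pvariance

# Made-up contributions of each component for five samples.
contributions = {
    "PAY_1": [0.8, -0.4, -0.3, 1.1, -0.4],
    "PAY_AMT1": [0.1, -0.2, 0.0, 0.05, 0.1],
    "PAY_1 x BILL_AMT1": [0.2, 0.0, 0.0, 0.2, 0.0],
}

def effect_importance(contribs):
    """Variance of each component, as a percentage of the total variance."""
    var = {name: pvariance(vals) for name, vals in contribs.items()}
    total = sum(var.values())
    return {name: 100 * v / total for name, v in var.items()}

ranked = sorted(effect_importance(contributions).items(),
                key=lambda kv: kv[1], reverse=True)
for name, pct in ranked:
    print(f"{name:<20} {pct:5.1f}%")
```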

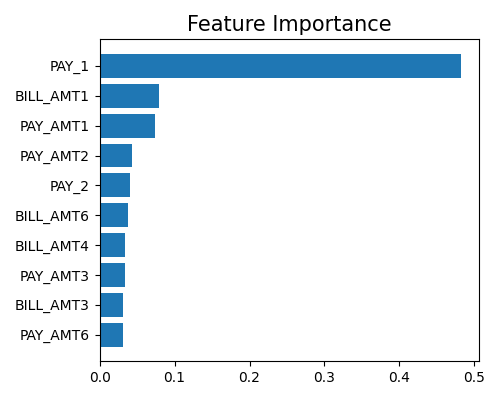

Feature importance

exp.model_interpret(model='Mono-XGB2', show="global_fi", figsize=(5, 4))
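Feature importance works at the level of individual features rather than components, so each pairwise interaction has to be attributed to the features it involves. A minimal sketch, assuming (hypothetically) that an interaction's share is split equally between its two features; PiML's actual aggregation rule may differ, and the importance values are made up:

```python
from collections import defaultdict

# Made-up component importances (percent); interaction keys list both features.
effect_shares = {
    ("PAY_1",): 70.0,
    ("PAY_AMT1",): 12.0,
    ("BILL_AMT1",): 6.0,
    ("PAY_1", "BILL_AMT1"): 8.0,
    ("PAY_AMT1", "BILL_AMT1"): 4.0,
}

def feature_importance(effects):
    """Aggregate component importances to features, splitting each
    interaction equally among its features (assumed rule)."""
    totals = defaultdict(float)
    for features, share in effects.items():
        for f in features:
            totals[f] += share / len(features)
    return dict(totals)

fi = feature_importance(effect_shares)
for name, pct in sorted(fi.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<10} {pct:5.1f}%")
```

Splitting this way preserves the total (still 100%), which keeps feature and effect importances on the same scale.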

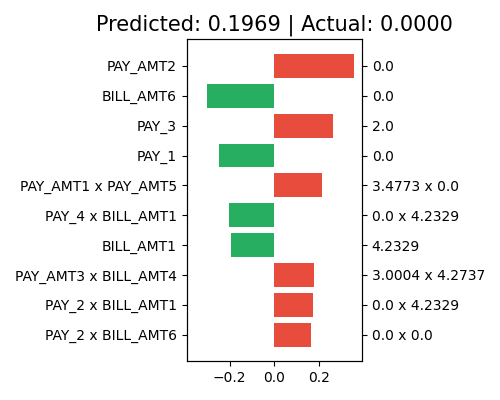

Local interpretation by effect

exp.model_interpret(model='Mono-XGB2', show="local_ei", sample_id=0, original_scale=True, figsize=(5, 4))
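Because XGB2 is additive, a single sample's raw prediction can be written as an intercept plus one contribution per component, which is what a local effect plot displays. A minimal sketch with a made-up intercept and contributions for one sample, assuming the contributions are on the log-odds scale and mapped to a probability with the logistic function, as in XGBoost's binary logistic objective:

```python
import math

intercept = -1.4  # hypothetical base score (log-odds)
contributions = {  # hypothetical per-component values for one sample
    "PAY_1": 0.9,
    "PAY_AMT1": -0.2,
    "PAY_1 x BILL_AMT1": 0.1,
}

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

logit = intercept + sum(contributions.values())
prob_default = sigmoid(logit)

# Largest contributions first, as a local effect bar chart would order them.
for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:<20} {value:+.2f}")
print(f"log-odds = {logit:.2f}, P(FlagDefault=1) = {prob_default:.3f}")
```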

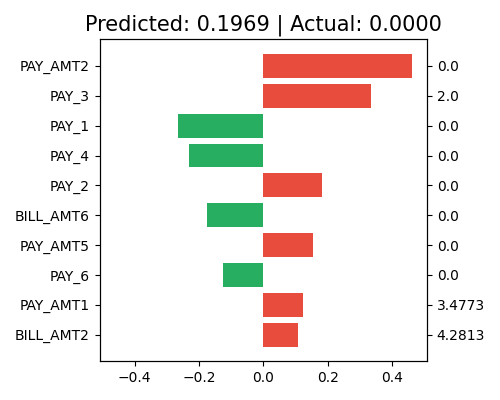

Local interpretation by feature

exp.model_interpret(model='Mono-XGB2', show="local_fi", sample_id=0, original_scale=True, figsize=(5, 4))
