piml.data.outlier_detection.IsolationForest¶

- class piml.data.outlier_detection.IsolationForest(n_estimators=100, max_samples='auto', max_features=1.0, bootstrap=False, n_jobs=None, random_state=None, verbose=0, warm_start=False, standardization=True)¶

A wrapper of sklearn’s Isolation Forest for outlier detection.

Return the anomaly score of each sample using the IsolationForest algorithm

The IsolationForest ‘isolates’ observations by randomly selecting a feature and then randomly selecting a split value between the maximum and minimum values of the selected feature.

Since recursive partitioning can be represented by a tree structure, the number of splittings required to isolate a sample is equivalent to the path length from the root node to the terminating node.

This path length, averaged over a forest of such random trees, is a measure of normality and our decision function.

Random partitioning produces noticeably shorter paths for anomalies. Hence, when a forest of random trees collectively produce shorter path lengths for particular samples, they are highly likely to be anomalies.

- Parameters:

- n_estimatorsint, default=100

The number of base estimators in the ensemble.

- max_samples“auto”, int or float, default=”auto”

- The number of samples to draw from X to train each base estimator.

If int, then draw

max_samplessamples.If float, then draw

max_samples * X.shape[0]samples.If “auto”, then

max_samples=min(256, n_samples).

If max_samples is larger than the number of samples provided, all samples will be used for all trees (no sampling).

- max_featuresint or float, default=1.0

The number of features to draw from X to train each base estimator.

If int, then draw

max_featuresfeatures.If float, then draw

max(1, int(max_features * n_features_in_))features.

Note: using a float number less than 1.0 or integer less than number of features will enable feature subsampling and leads to a longer runtime.

- bootstrapbool, default=False

If True, individual trees are fit on random subsets of the training data sampled with replacement. If False, sampling without replacement is performed.

- n_jobsint, default=None

The number of jobs to run in parallel for both

fitandpredict.Nonemeans 1 unless in ajoblib.parallel_backendcontext.-1means using all processors.- random_stateint, RandomState instance or None, default=None

Controls the pseudo-randomness of the selection of the feature and split values for each branching step and each tree in the forest.

Pass an int for reproducible results across multiple function calls.

- verboseint, default=0

Controls the verbosity of the tree building process.

- warm_startbool, default=False

When set to

True, reuse the solution of the previous call to fit and add more estimators to the ensemble, otherwise, just fit a whole new forest.- standardizationbool, default=True

Whether to standardize covariates before running the algorithm.

- Attributes:

- estimator_

~sklearn.tree.ExtraTreeRegressorinstance The child estimator template used to create the collection of fitted sub-estimators.

- base_estimator_ExtraTreeRegressor instance

The child estimator template used to create the collection of fitted sub-estimators.

- estimators_list of ExtraTreeRegressor instances

The collection of fitted sub-estimators.

- estimators_features_list of ndarray

The subset of drawn features for each base estimator.

- estimators_samples_list of ndarray

The subset of drawn samples (i.e., the in-bag samples) for each base estimator.

- max_samples_int

The actual number of samples.

- offset_float

Offset used to define the decision function from the raw scores. We have the relation:

decision_function = score_samples - offset_.offset_is defined as follows. When the contamination parameter is set to “auto”, the offset is equal to -0.5 as the scores of inliers are close to 0 and the scores of outliers are close to -1. When a contamination parameter different than “auto” is provided, the offset is defined in such a way we obtain the expected number of outliers (samples with decision function < 0) in training.- n_features_in_int

Number of features seen during

fit.- feature_names_in_ndarray of shape (

n_features_in_,) Names of features seen during

fit. Defined only whenXhas feature names that are all strings.

- estimator_

Methods

decision_function(X[, scale])Predict raw outliers score of X using the fitted detector.

fit(X[, y, sample_weight])Fit the model.

predict([X, scale, threshold])Predict raw outlier indicator.

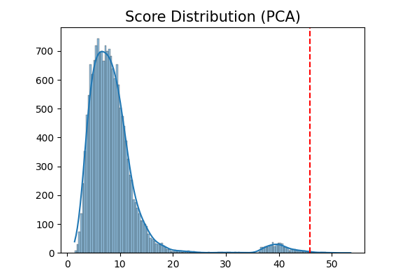

- decision_function(X, scale=True)¶

- Predict raw outliers score of X using the fitted detector.

For consistency, outliers are assigned with larger anomaly scores.

- Parameters:

- Xnumpy array of shape (n_samples, n_features)

The training input samples. Sparse matrices are accepted only if they are supported by the base estimator.

- scalebool, default=True

If True, scale X before calculating the outlier score.

- Returns:

- outlier_scoresnumpy array of shape (n_samples,)

The anomaly score of the input samples.

- fit(X, y=None, sample_weight=None)¶

Fit the model.

- Parameters:

- Xnp.ndarray of shape (n_samples, n_features)

Data features.

- ynp.ndarray of shape (n_samples,), default=None

Data response.

- sample_weightnp.ndarray of shape (n_samples, ), default=None

Sample weight.

- predict(X=None, scale=True, threshold=0.9)¶

Predict raw outlier indicator.

Normal samples are classified as 1 and outliers are classified as -1.

- Parameters:

- Xnumpy array of shape (n_samples, n_features)

The training input samples. Sparse matrices are accepted only if they are supported by the base estimator.

- scalebool, default=True

If True, scale X before calculating the outlier score.

- thresholdfloat, default=0.9

The quantile threshold of outliers. For example, the samples with outlier scores greater than 90% quantile of the whole sample will be classified as outliers.

- Returns:

- outlier_indicatornumpy array of shape (n_samples,)

The binary array indicating whether each sample is outlier.