piml.data.outlier_detection.KNN

- class piml.data.outlier_detection.KNN(n_neighbors=5, radius=1.0, algorithm='auto', leaf_size=30, metric='minkowski', p=2, metric_params=None, n_jobs=None, score_method='mean', standardization=True)

A wrapper around sklearn’s k-nearest neighbors search for outlier detection.

- Parameters:

- n_neighbors : int, default=5

Number of neighbors to use.

- radius : float, default=1.0

Range of parameter space to use by default for radius-based neighbor queries.

- algorithm : {‘auto’, ‘ball_tree’, ‘kd_tree’, ‘brute’}, default=‘auto’

Algorithm used to compute the nearest neighbors:

- ‘ball_tree’ will use BallTree.
- ‘kd_tree’ will use KDTree.
- ‘brute’ will use a brute-force search.
- ‘auto’ will attempt to decide the most appropriate algorithm based on the values passed to the fit method.

Note: fitting on sparse input will override the setting of this parameter, using brute force.

- leaf_size : int, default=30

Leaf size passed to BallTree or KDTree. This can affect the speed of the construction and query, as well as the memory required to store the tree. The optimal value depends on the nature of the problem.

- metric : str or callable, default=‘minkowski’

Metric to use for distance computation. Default is “minkowski”, which results in the standard Euclidean distance when p = 2.

If metric is “precomputed”, X is assumed to be a distance matrix and must be square during fit. X may be a sparse graph, in which case only “nonzero” elements may be considered neighbors.

If metric is a callable function, it takes two arrays representing 1D vectors as inputs and must return one value indicating the distance between those vectors. This works for Scipy’s metrics, but is less efficient than passing the metric name as a string.

- p : float, default=2

Parameter for the Minkowski metric from sklearn.metrics.pairwise.pairwise_distances. When p = 1, this is equivalent to using manhattan_distance (l1), and euclidean_distance (l2) for p = 2. For arbitrary p, minkowski_distance (l_p) is used.

- metric_params : dict, default=None

Additional keyword arguments for the metric function.

- n_jobs : int, default=None

The number of parallel jobs to run for neighbors search. None means 1 and -1 means using all processors.

- score_method : {‘mean’, ‘median’, ‘max’}, default=‘mean’

The aggregation method for nearest neighbors.

‘mean’ will use the average distance of neighbors to the sample as outlier score.

‘median’ will use the median distance of neighbors to the sample as outlier score.

‘max’ will use the maximum distance of neighbors to the sample as outlier score.

- standardization : bool, default=True

Whether to standardize covariates before running the algorithm.
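The interaction of n_neighbors, p, score_method, and standardization can be illustrated with a minimal NumPy sketch. It is not PiML's implementation: the helper name knn_outlier_scores is hypothetical, standardization is assumed to mean z-scoring each column, and each sample is assumed to be excluded from its own neighbor set.

```python
import numpy as np


def knn_outlier_scores(X, n_neighbors=5, p=2, score_method="mean", standardization=True):
    """Illustrative k-NN outlier score (hypothetical helper, not PiML's code)."""
    X = np.asarray(X, dtype=float)
    if standardization:
        # Assumption: standardization means z-scoring each column.
        std = X.std(axis=0)
        std[std == 0] = 1.0  # avoid division by zero for constant columns
        X = (X - X.mean(axis=0)) / std

    # Pairwise Minkowski distances: (sum_j |x_ij - x_kj|^p)^(1/p).
    diff = np.abs(X[:, None, :] - X[None, :, :])
    dist = (diff ** p).sum(axis=2) ** (1.0 / p)

    # Assumption: a sample is not its own neighbor, so mask the diagonal.
    np.fill_diagonal(dist, np.inf)
    nearest = np.sort(dist, axis=1)[:, :n_neighbors]

    # Aggregate the k nearest distances into one score per sample.
    aggregate = {"mean": np.mean, "median": np.median, "max": np.max}[score_method]
    return aggregate(nearest, axis=1)


rng = np.random.default_rng(0)
# 50 points around the origin plus one point placed far away (last row).
X = np.vstack([rng.normal(size=(50, 2)), [[8.0, 8.0]]])
scores = knn_outlier_scores(X, n_neighbors=5, score_method="mean")
```

In this sketch the isolated last row receives the largest score, since its nearest neighbors are all far away. Switching score_method to ‘max’ can only raise each score relative to ‘median’, because it takes the farthest of the same k distances.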

Methods

- decision_function(X[, scale]): Predict the raw outlier scores of X using the fitted detector.
- fit(X): Fit the model.
- predict([X, scale, threshold]): Predict the raw outlier indicator.

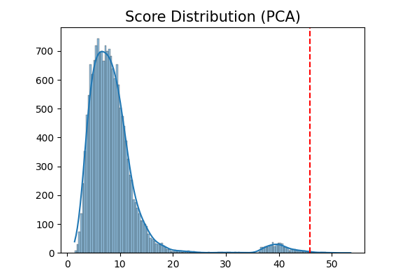

- decision_function(X, scale=True)

Predict the raw outlier scores of X using the fitted detector.

For consistency, outliers are assigned larger anomaly scores.

- Parameters:

- X : numpy array of shape (n_samples, n_features)

The input samples to score. Sparse matrices are accepted only if they are supported by the base estimator.

- scale : bool, default=True

If True, scale X before calculating the outlier score.

- Returns:

- outlier_scores : numpy array of shape (n_samples,)

The anomaly scores of the input samples.

- fit(X)

Fit the model.

- Parameters:

- X : np.ndarray of shape (n_samples, n_features)

Data features.

- predict(X=None, scale=True, threshold=0.9)

Predict the raw outlier indicator.

Normal samples are classified as 1 and outliers are classified as -1.

- Parameters:

- X : numpy array of shape (n_samples, n_features), default=None

The training input samples. Sparse matrices are accepted only if they are supported by the base estimator.

- scale : bool, default=True

If True, scale X before calculating the outlier score.

- threshold : float, default=0.9

The quantile threshold for outliers. For example, with threshold=0.9, samples whose outlier scores exceed the 90% quantile of the whole sample are classified as outliers.

- Returns:

- outlier_indicator : numpy array of shape (n_samples,)

The binary array indicating whether each sample is an outlier (-1) or normal (1).
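The quantile rule described for threshold can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not PiML's code: the helper quantile_outlier_indicator is hypothetical, and the cutoff is assumed to be computed from the same scores that are being labeled.

```python
import numpy as np


def quantile_outlier_indicator(scores, threshold=0.9):
    """Label scores above the given quantile as -1 (outlier), others as 1.

    Hypothetical helper for illustration only.
    """
    scores = np.asarray(scores, dtype=float)
    # Assumption: the cutoff is the quantile of the scores passed in.
    cutoff = np.quantile(scores, threshold)
    return np.where(scores > cutoff, -1, 1)


# Nine ordinary scores and one clearly larger score (last entry).
scores = np.array([0.2, 0.3, 0.25, 0.4, 0.35, 0.3, 0.28, 0.33, 0.31, 5.0])
labels = quantile_outlier_indicator(scores, threshold=0.9)
```

Here only the last sample exceeds the 90% quantile, so it is the only one labeled -1. Raising threshold toward 1.0 flags fewer samples; lowering it flags more.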